VS Code with Code Llama local using Continue

Can we stop paying for Copilot yet?

Short answer: No.

There are a lot of benefits to running your coding LLM locally, such as:

Privacy - Not sharing your code with anyone

Security - Your code doesn’t leave your computer

Speed - Possibly your rig is faster than hitting the server (… probably not today though)

With the launch of Code Llama by Meta, we have an LLM that is free for commercial use, so it seemed like the time to try everything out. There’s also the Continue VS Code plugin, which provides the code suggestions by talking to the LLM.

My setup:

Ubuntu 22.04

RTX 3070 8GB VRAM (Optional for hardware acceleration)

SSD - something Gen4

Pre-reqs:

This was actually a bit of a pain in the ass to get working, since about 6 days ago many of the dependent libraries, including llama.cpp, dropped support for GGML models in favor of GGUF, without backwards compatibility and without updating their documentation. These are free projects in experimental phases, so that’s OK, but it’s really confusing if you’re new to all this.

The steps to get everything set up (hopefully complete, lmk in the comments):

(Missing steps would be from a few pivots of approaches along the way as I hit roadblocks.)

Move to wherever you want to work from. Then I created a new conda env:

conda create -n myenv

conda activate myenv

I likely missed some libraries in this next step. Please add any that it tells you are missing when you run other commands.

pip install huggingface_hub joblib llama-cpp-python[server]

This step will vary based on your hardware setup. With CUDA properly set up locally (not with the Nvidia Container Toolkit), this worked for me. Check the install output to see whether it actually used your GPU; it will be obvious if it did.

CMAKE_ARGS="-DLLAMA_CUBLAS=on" FORCE_CMAKE=1 pip install llama-cpp-python --no-cache-dir --verbose

If that didn’t work, uninstall with this and try again with different commands:

pip uninstall -y llama-cpp-python

Download a model. I used a script that puts it where Hugging Face expects it, for reuse with some other frameworks. (This could take a while; you can move on while it downloads.)

from huggingface_hub import hf_hub_download

REPO_ID = "TheBloke/CodeLlama-13B-GGUF"

FILENAME = "codellama-13b.Q5_K_M.gguf"

# Download into the Hugging Face cache (or reuse a cached copy) and get the local path back.

model_path = hf_hub_download(repo_id=REPO_ID, filename=FILENAME)

print(model_path)

I used tree to quickly find the path to the model like this:

tree ~/.cache/huggingface/hub/models--TheBloke--CodeLlama-13B-GGUF

The model is under blobs.
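If you don't have tree installed, the same lookup can be sketched in Python. This assumes the usual Hugging Face cache layout, where each file under snapshots/ is a symlink into blobs/. The function name find_gguf_blobs is just something I made up for illustration.

```python
from pathlib import Path


def find_gguf_blobs(repo_dir):
    """Map each .gguf filename under snapshots/ to the blob file it points at."""
    repo_dir = Path(repo_dir).expanduser()
    found = {}
    for path in sorted(repo_dir.glob("snapshots/*/*.gguf")):
        # Snapshot entries are normally symlinks into blobs/; resolve() follows them.
        found[path.name] = path.resolve()
    return found


if __name__ == "__main__":
    repo = "~/.cache/huggingface/hub/models--TheBloke--CodeLlama-13B-GGUF"
    for name, blob in find_gguf_blobs(repo).items():
        print(f"{name} -> {blob}")
```

Since the snapshot entry is a symlink to the blob, passing the snapshot path as the model path should work too, though I only tried the blob path.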

You can start the model server with this command, substituting in your model path and adjusting the number of GPU layers as you see fit. (You can remove --n_gpu_layers to run without GPU acceleration.)

python -m llama_cpp.server --model ~/.cache/huggingface/hub/models--TheBloke--CodeLlama-13B-GGUF/blobs/4e29b05fa957327b0fcd7e99cccc7e0fe1d5f84a1794f9a21827d37fbfd39114 --n_gpu_layers 16

I installed Continue.continue in VS Code from the marketplace.
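Before pointing Continue at the server, it's worth checking that the server answers at all, and how long it takes. Here's a minimal sketch using only the standard library. It assumes the server is on llama-cpp-python's default address (http://localhost:8000) and uses its OpenAI-style /v1/completions route. The helper names, prompt and sampling values are my own placeholders.

```python
import json
import time
import urllib.request

# Assumption: the server runs on llama-cpp-python's default host and port.
SERVER_URL = "http://localhost:8000"


def build_completion_request(prompt, max_tokens=64, server_url=SERVER_URL):
    """Build a POST request for the server's OpenAI-style completions route."""
    payload = {"prompt": prompt, "max_tokens": max_tokens, "temperature": 0.1}
    return urllib.request.Request(
        url=f"{server_url}/v1/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def timed_completion(prompt, **kwargs):
    """Send the prompt and return (generated_text, elapsed_seconds)."""
    request = build_completion_request(prompt, **kwargs)
    start = time.perf_counter()
    # Generous timeout, since a partly CPU-bound 13B model can take a while.
    with urllib.request.urlopen(request, timeout=600) as response:
        body = json.load(response)
    elapsed = time.perf_counter() - start
    return body["choices"][0]["text"], elapsed


# Example (needs the server running):
#   text, seconds = timed_completion("def fibonacci(n):")
#   print(f"{seconds:.1f}s: {text!r}")
```

If this call fails or hangs, the problem is on the server side rather than in Continue. The elapsed time also gives you a number to compare when you change the GPU layer count.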

Add a config to Continue to tell it where the server is (https://continue.dev/docs/customization#local-models-with-ggml). The GGML setting there also works for GGUF, since no GGUF-specific setting exists at the time of writing.

Make sure you select GGML on the Continue tab and then start praying that it finds your server.

Try triggering the code completion from this tab with /edit or /comment.

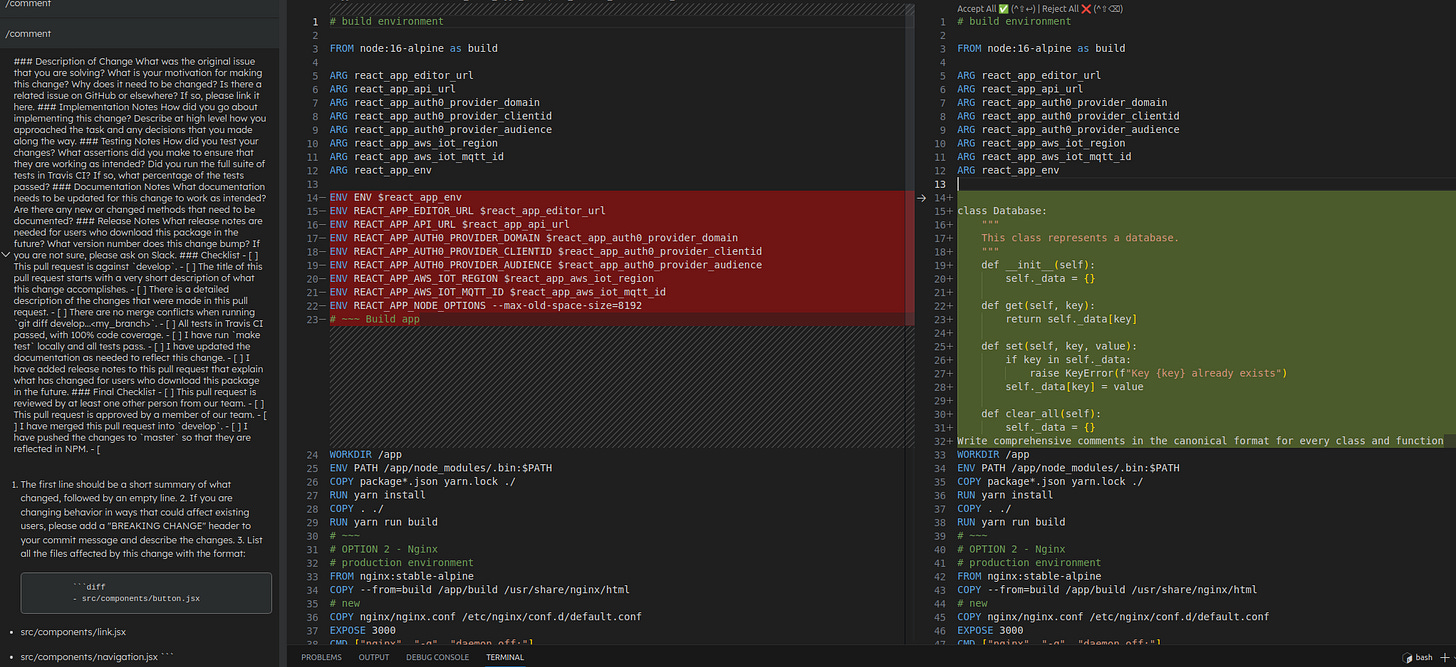

It really did not understand how to comment on this Dockerfile. I mean, idk what I wanted it to say, but putting some Python into it was just bizarre.

Update - better prompt splitting

I found a post somewhere explaining that if some libraries are missing, the LLM responds more slowly and sometimes in a format that the prompt splitting doesn’t handle correctly, which is what happened to me.

The fix:

pip install fastchat flask_cors

Then restart the server, and restart Continue by disabling and re-enabling it. The results aren’t much better.

Conclusion

This is not ready for prime time on my consumer hardware. I’ll just fork out the change for Copilot for a while longer.

The code completion is very slow (minutes long) and useless.

Please let me know if you had better luck in the comments. Hopefully this helps you navigate the setup better for your experiments!